Continuing this series (I'm getting these written much faster than planned), next we'll be discussing using the Jenkins continuous integration tool as a test manager for Sikuli tests. This is where a large amount of my personal work has gone in developing the Sikuli testing framework I've been describing. The majority of the Jenkins integration is handled by a shell script that I call testrunner. It deals with the administrative tasks of running the Sikuli tests, which include:

- Jenkins integration

- getting the latest versions of the application for testing

- starting up the application (see the post on iOS testing for more detail about this)

- setting up the test environment

- running the tests and recording results and logs and dealing with test failures

- clean up and reporting

In this post I'm going to attempt to cover each of the items above in enough depth to give you a feel for what I'm doing. Generally speaking what you'll need to do will probably be different but this should give you a place to start and some techniques for dealing with the problems that come up. I should mention that this is going to be a more "unix" than "sikuli" post and that I actually make use of a variety of tools for managing this test harness. If you haven't gotten this already... I'm a big fan of the unix tool set and use it extensively in my work. This lets me continuously build on the work I've done before which I find provides me a lot more leverage as a developer than coding everything from scratch or being a slave to a single tool.

Jenkins integration

The Jenkins integration itself is quite simple. I started by creating a free-style project and then added two "Execute shell" tasks to it. The first does a clone of my Sikuli project from git. The second generates a list of tests to run (by parsing the full list of tests and breaking it into chunks) and then executes the test runner with that list.

Here's the code:

set +e

LEN=$(cat sikuli/tests/testscripts.txt | wc -l)

#echo $LEN

WINDOWS=$(expr $LEN / 5)

if [[ $(expr $LEN % 5) -gt 0 ]]

then

WINDOWS=$(expr $WINDOWS + 1)

fi

#echo $WINDOWS

W=$(expr $BUILD_NUMBER % $WINDOWS)

echo "WINDOW: $W"

OFFSET=$(expr $W \* 5 + 1)

OFFSET2=$(expr $OFFSET + 4)

echo "OFFSETS: $OFFSET $OFFSET2"

TESTS=$(sed -n "${OFFSET},${OFFSET2}p" sikuli/tests/testscripts.txt)

echo "TESTS: ${TESTS}"

set -e

bash sikuli/testrunner.sh ${TESTS}

It's not all that exciting or groundbreaking... but if you've got a lot of tests this is a good way to limit the amount of testing time lost when a test gets stuck or other "bad things" happen.
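The windowing arithmetic above can also be done with bash's built-in `$(( ))` arithmetic, which avoids spawning `expr` for every step. Here's a minimal sketch of that idea. The function name `window_bounds` and its argument order are made up for illustration and aren't part of the job shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first and last line numbers (1-based)
# of the batch that a given build should run.
#   $1 = total number of tests, $2 = build number, $3 = batch size
window_bounds() {
    local total=$1 build=$2 size=$3
    # Number of batches, rounding up so a partial final batch still counts
    local windows=$(( (total + size - 1) / size ))
    if [ "$windows" -eq 0 ]; then
        return 1    # empty test list: nothing to run
    fi
    # Successive builds cycle through the batches
    local w=$(( build % windows ))
    local first=$(( w * size + 1 ))
    local last=$(( first + size - 1 ))
    echo "$first $last"
}

# Example: 12 tests, build number 7, batches of 5
window_bounds 12 7 5
```

The two numbers it prints can then be handed to `sed -n` as a line range, the same way the job above uses its two offsets.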

The job is also set up to trigger itself when it finishes so that these run forever (or until you manually abort the job). It is also configured to gather up a bunch of log files (*.log) as build artifacts so they can be analyzed later. Most of the interesting results are captured as test output (discussed further below).

Getting the latest version of the application

If you don't have a build process for your application... you should have one. And you should keep an archive of your old builds. For iOS we keep at minimum the release builds that we submit to Apple, since you need these for upgrade testing as well as for crash symbolication if you get crash reports from Apple (or use a service like HockeyApp). At the start of each test run I use wget to grab a fresh copy of our application and some other pre-compiled bits: the slowmo disabling tool, the current version of Sikuli that I'm using, and the iphonesim tool. Downloading Sikuli each time means I don't have to worry about what version is installed on a Jenkins build host (I have multiple hosts, and we plan to do distributed testing at some point in the future).

So for Sikuli this looks like:

wget -r -nH -q --cut-dirs=1 --no-parent --reject "index.html*" http://example.com/util/Sikuli-IDE.app/

chmod -R a+x Sikuli-IDE.app

(URLs have been changed since I don't really want to publicize my servers...)
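Because `-q` silences wget's output, it can be worth confirming that the pieces actually arrived before going any further. The following is only a sketch of one way to do that. The helper name `missing_artifacts` and the file names in the usage comment are illustrative assumptions, not something taken from the real testrunner.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every expected path that is missing under a
# directory, and return non-zero if anything was missing.
#   $1 = directory to check, remaining args = paths relative to it
missing_artifacts() {
    local dir=$1 status=0 name
    shift
    for name in "$@"; do
        if [ ! -e "${dir}/${name}" ]; then
            echo "$name"
            status=1
        fi
    done
    return "$status"
}

# Example usage inside the Jenkins job (file names are illustrative only):
#   if ! missing_artifacts "$WORKSPACE" Sikuli-IDE.app iphonesim; then
#       echo "download incomplete, aborting"
#       exit 1
#   fi
```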

Starting the App in the Simulator

There are a couple of steps to this... the first is to make sure that the iOS simulator is not running. You can't start multiple copies at the same time so I have a check for whether it is running and I kill it if it already is.

SIM_PID=`ps x | grep "iPhone Simulator " | grep -v grep | awk '{print $1}'`

if [ "$SIM_PID" == "" ]

then

echo "iPhone Simulator.app is not running"

else

echo "iPhone Simulator.app is running let's kill it"

kill -9 "$SIM_PID"

fi

We then start up the simulator and our application using iphonesim:

#APPLOC has the absolute path to the app we downloaded using wget

./iphonesim launch "$APPLOC" &

SIM_PID=""

SIMSTARTTIMER=0

while [ "$SIM_PID" == "" ]

do

SIM_PID=`ps x | grep "iPhone Simulator " | grep -v grep | awk '{print $1}'`

if [ "$SIM_PID" == "" ]

then

echo "iPhone Simulator.app is still not running"

if [ "$SIMSTARTTIMER" == "30" ]

then

echo "The simulator never started!"

exit 1

fi

SIMSTARTTIMER=$(( $SIMSTARTTIMER + 1 ))

sleep 1

else

echo "iPhone Simulator.app is running we can continue now"

fi

done

Since the simulator can take a variable amount of time to launch, we have some timing code to check that it actually started. If it hasn't started after 30 seconds we terminate the build run with a non-zero return code (error). Otherwise we continue and apply the slowmo patch (see the iOS testing post for details).
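The polling loop above is a pattern that comes up a lot, so it can be pulled out into a small reusable function. This is a sketch rather than what the testrunner actually does: `wait_until` is a hypothetical name, and the usage comment assumes `pgrep` is available on the build host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command once a second until it succeeds or
# the timeout (in seconds) runs out. Returns 0 on success, 1 on timeout.
#   $1 = timeout in seconds, remaining args = command to poll
wait_until() {
    local timeout=$1 waited=0
    shift
    until "$@"; do
        if [ "$waited" -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
        waited=$(( waited + 1 ))
    done
    return 0
}

# Example usage (pgrep -f matches against the full command line):
#   ./iphonesim launch "$APPLOC" &
#   if ! wait_until 30 pgrep -f "iPhone Simulator" > /dev/null; then
#       echo "The simulator never started!"
#       exit 1
#   fi
```

Keeping the condition as an ordinary command means the same function can wait for a process, a file, or a network port without any changes.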

Setting up testing environment

Next we perform some checks and get the environment into a consistent state. Since our application involves user accounts, we have a set reserved for the Sikuli tests, and these accounts interact with one another during the tests. So that we always start in the same place, we have a Sikuli script that performs a login using the primary account. This gets run before we start any testing. We also have some other scripts which make sure that all of the accounts have their activity cleared. These cleanup scripts used to be written with Sikuli but we were finding them slow and unreliable. We recently replaced them with some network-only management code based on JMeter, but this is seriously out of scope for this post.

But this is what we do to run the login script (brilliantly called firstlogin ;-):

java -jar "${WORKSPACE}/Sikuli-IDE.app/Contents/Resources/Java/sikuli-script.jar" -s "${WORKSPACE}/sikuli/meta/firstlogin.sikuli"

This shows how to do a simple start of a sikuli script from the command line. The ${WORKSPACE} variable is something that Jenkins sets which tells you the directory where the test is running. When actually running the tests there's a bit more complexity since we want to log the output, and deal with issues like the test getting stuck. More on this in a moment.

Running the tests

I didn't mention earlier that the testrunner can run in two modes. If you don't pass it any command line arguments it grabs a file with a list of the "working" tests and starts running them all. You can also pass it a list of tests and it'll run just those tests. This second mode was created to make doing spot tests easier and for testing the test runner itself.* The script then iterates through the list of tests and tracks the amount of time it takes to run each test, the log output from Sikuli and the log output from the simulator. Once a test finishes running, the logs are analyzed to check whether the test passed or failed. The results are stashed into an XML file which is later used to report results back to Jenkins (making use of its test result display capabilities). If the test failed, the system also attempts to clean up after itself by clearing the accounts and trying to get the simulator back to a known place in the application (using a Sikuli script called backout; we'll discuss this further in the post about error handling).

This is the really interesting part of the script and the part that took the most effort to develop.

(("${WORKSPACE}/timeout3" -t 720 java -jar "${WORKSPACE}/Sikuli-IDE.app/Contents/Resources/Java/sikuli-script.jar" -s "${WORKSPACE}/sikuli/tests/$test" 2>&1 1>&3 | tee "${WORKSPACE}/${BUILD_NUMBER}.${NAME_CLEAN}.errors.log") 3>&1 1>&2 | tee "${WORKSPACE}/${BUILD_NUMBER}.${NAME_CLEAN}.output.log") > "${WORKSPACE}/${BUILD_NUMBER}.${NAME_CLEAN}.final.log" 2>&1

RETURN=$?

ISTERMINATED=$(grep TERMINATED "${WORKSPACE}/${BUILD_NUMBER}.${NAME_CLEAN}.final.log")

echo ISTERMINATED: $ISTERMINATED

if [ "TERMINATED" == "$ISTERMINATED" ]

then

echo "TERMINATED" >> "${WORKSPACE}/${BUILD_NUMBER}.${NAME_CLEAN}.errors.log"

fi

What this crazy convoluted bit of shell code does is get the various outputs of the application going into separate files: one for standard error, another for standard out and another for the combined log. All of this is executed within the purview of timeout3, a bash script which sets up a sane timeout capability (timeout3 bash cookbook). This allows me to deal with situations where Sikuli has gotten stuck in a loop or has failed completely without the Sikuli process actually terminating. Once Sikuli exits we parse the logs and fill out the results. If the test failed we grab the error from Sikuli and jam it into the results XML. We then run the backout scripts with a similar wrapper to the one used for the tests, so we can catch timeouts and log information about how much time we're spending dealing with test failures. Gathering information about how much time you're spending on various tests is critical to see where you can improve speed**.
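If the redirections above are hard to follow, it may help to see the same file descriptor juggling wrapped in a function and run against a toy command. This is a sketch only: `run_logged` and the log prefix are hypothetical names, and the toy `bash -c` command stands in for Sikuli. One thing to be aware of is that in bash `$?` after a pipeline reports the exit status of the last command in the pipeline (here `tee`) unless `pipefail` is set, so the sketch turns that option on inside a subshell.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run a command, writing stdout, stderr, and the
# combined stream to three separate log files sharing a common prefix.
#   $1 = log file prefix, remaining args = command to run
run_logged() {
    local prefix=$1
    shift
    (
        # Make the pipeline report the command's failure instead of tee's success
        set -o pipefail
        {
            {
                # stderr goes down the inner pipe; stdout is parked on fd 3
                "$@" 2>&1 1>&3 | tee "${prefix}.errors.log"
            } 3>&1 1>&2 | tee "${prefix}.output.log"
        } > "${prefix}.final.log" 2>&1
    )
}

# Example: a toy command that writes to both streams and exits with status 3
logdir=$(mktemp -d)
status=0
run_logged "${logdir}/demo" bash -c 'echo "to stdout"; echo "to stderr" >&2; exit 3' || status=$?
echo "exit status: $status"
```

After this runs, `demo.output.log` holds only the stdout line, `demo.errors.log` holds only the stderr line, and `demo.final.log` holds both. The relative order of the two streams in the combined file isn't guaranteed.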

If you're not familiar with bash here's a quick way of finding out how long something took to execute:

START=`date +%s`

echo START: $START

sleep 5

#replace with the work you want to time

END=`date +%s`

#SECONDS is a built-in bash timer variable, so store the result under another name

ELAPSED=$(( END - START ))

echo END: $END

echo ELAPSED: $ELAPSED

I've attached a sample test results file that you can peruse if you're curious about the structure. This one shows three timed-out tests, one passing test and one failing test, so you can get an idea of what you'd need to generate. I currently generate these using bash's here documents. But I've been considering reworking this into a system based around xmlstarlet, where the complete document is created at the start with all tests marked as skipped and the details are then filled in as the tests are run. This should behave a bit better in situations where the test run fails for some reason before reaching the end. With the current setup, if the test run fails for any reason you lose all the results up to that point, which is less than ideal.
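For anyone who hasn't used here documents for this sort of thing, a minimal sketch of emitting JUnit-style results might look like the following. The helper names `xml_escape` and `testcase_xml` are hypothetical, and the element and attribute names follow the common JUnit layout rather than being copied from the attached sample, so check them against what your Jenkins plugin expects.

```shell
#!/usr/bin/env bash
# Escape the characters that are special in XML text and attribute values.
xml_escape() {
    printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g' -e 's/"/\&quot;/g'
}

# Hypothetical helper: print one JUnit-style <testcase> element.
#   $1 = test name, $2 = duration in seconds, $3 = optional failure message
testcase_xml() {
    local name duration message
    name=$(xml_escape "$1")
    duration=$2
    message=$(xml_escape "${3:-}")
    if [ -z "$message" ]; then
        cat <<EOF
  <testcase name="${name}" time="${duration}"/>
EOF
    else
        cat <<EOF
  <testcase name="${name}" time="${duration}">
    <failure message="${message}"/>
  </testcase>
EOF
    fi
}

# Example: one passing and one failing test wrapped in a suite
echo '<testsuite name="sikuli" tests="2" failures="1">'
testcase_xml "login.sikuli" 42
testcase_xml "upload.sikuli" 720 "TERMINATED"
echo '</testsuite>'
```

Escaping the name and message matters because Sikuli error text can easily contain quotes or angle brackets, and a single unescaped character is enough to make the whole report unparseable.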

*And more recently has become the primary mode of running tests. With over 100 tests, your chances of getting a test failure that takes down the entire testing framework start to get very high... so we now run all the tests in small batches (5 tests at a time) and aggregate the results. I'll discuss this further in a post about error handling.

**It might also be interesting to create some sort of profiling layer for our shared test bits in the metasikuli script and see how fast the various parts run and how often we run them. But that's another project for another day.

Cleanup and Reporting

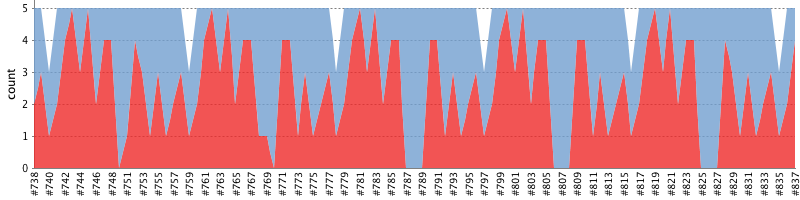

Clean up is pretty straight forward. As we do at the start start of the test run we kill off the simulator and reset the account data. Currently the system just relies on Jenkin's artifact system and the xUnit plugin for gathering up the log data and logging the test reports. These are then displayed inside Jenkins in a simple chart that looks like this:

Since moving to segmented test runs this chart isn't quite as useful as it used to be, since each test run only deals with 5 tests. So one of my upcoming projects is to work on a new tool to aggregate the results into a comprehensive chart that gives an overview of the whole system. Breaking the tests up like this was inspired by my interactions with the Mozilla Project's Tinderbox tool from back in the early days of Mozilla (back when they were still calling it Firebird).

Conclusion

So that's the basic approach. If you have questions about the details or would like more information feel free to post a comment or get in contact with me. I'm more than happy to answer questions and don't mind updating posts with additional details if desired.

Our application's simulator build generates an extremely detailed activity log which I use for other testing purposes. This might be an interesting topic for another post about server testing.